Alert payload standardization: Improve AIOps alert correlation

Monitoring tools share alerts in a variety of formats, with inconsistent data points and crucial information missing. That leaves you and your team stuck in the middle, trying to analyze and act on incomplete or irrelevant alerts requiring lots of manual intervention, time, and energy to communicate and coordinate during incident response.

Standardizing your alert payloads is a key starting point if you want to improve your alert correlation. Better alert correlation then transforms chaotic noise into actionable insights and unlocks your full potential for predicting problems, automating responses, and ensuring smooth IT operations.

Read on to discover the step-by-step strategies to standardize your alert payloads, level-up your AIOps alert correlation, and enable proactive ITOps.

What is an alert payload in AIOps?

An ‘alert payload’ is the actual data or content of an alert generated by a monitoring tool or system. It contains specific information related to an incident, anomaly, or event.

The alert payload generally includes details like the name of the impacted resource, specific error messages, performance metrics, and other relevant information that helps IT operations teams understand and respond to the issue.

However, as you’re likely well aware, the content of an alert payload can vary significantly based on the source of the alert, the type of system being monitored, and the nature of the incident. This is why it’s critical to define standard formats for alerts and mandatory data points to ensure effective correlation, prioritization, and routing strategies for your IT operations.

Why is it important to standardize your alert payloads?

Alert payload standardization is the process of defining and enforcing a consistent structure for the information contained in alerts. It ensures that essential data, such as the affected service, owner, and priority, is included, making it easier for IT teams to respond effectively.

Key challenges without alert standardization:

- Lack of communication: Given monitoring teams and IT operations or NOC teams these different teams’ various tools, objectives, and data interpretations, some monitoring teams may not fully grasp the operational needs and context required for standardized alert payloads.

- Poor operational visibility: Sharing alerts across teams with different formats and missing context hinders proper communication and coordination during incidents.

- Delayed incident response and high MTTR: If key details are missing, it takes time to gather complete information before taking action, which extends downtime and can impact users.

- More outages: Without standardized alert payloads, inconsistencies in alert data can lead to overlooked issues and potentially result in more frequent service outages.

- Can’t easily identify or fix non-compliant alerts: Inconsistent or noncompliant alerts are challenging to manage with AIOps systems, making it essential to standardize payloads for efficient automation and response.

As a result, you can face suboptimal alerts that slow down incident response and delay response to emerging issues before they become serious or even lead to an outage.

Steps to standardize your alert payloads

Setting up standardized alert payloads is crucial for ensuring consistency and efficiency in IT operations. By following the steps outlined below, you’ll enable more streamlined automation and better comprehension of incident data.

Step 1: Collect business requirements

Before standardizing your alert payloads you’ll need to ensure that alerts align with the organization’s specific needs and priorities.

For instance, a financial institution’s business requirements may involve detecting unauthorized access to customer accounts. This means that their alert payloads might include specific user and account information to help security teams swiftly investigate and respond to potential breaches.

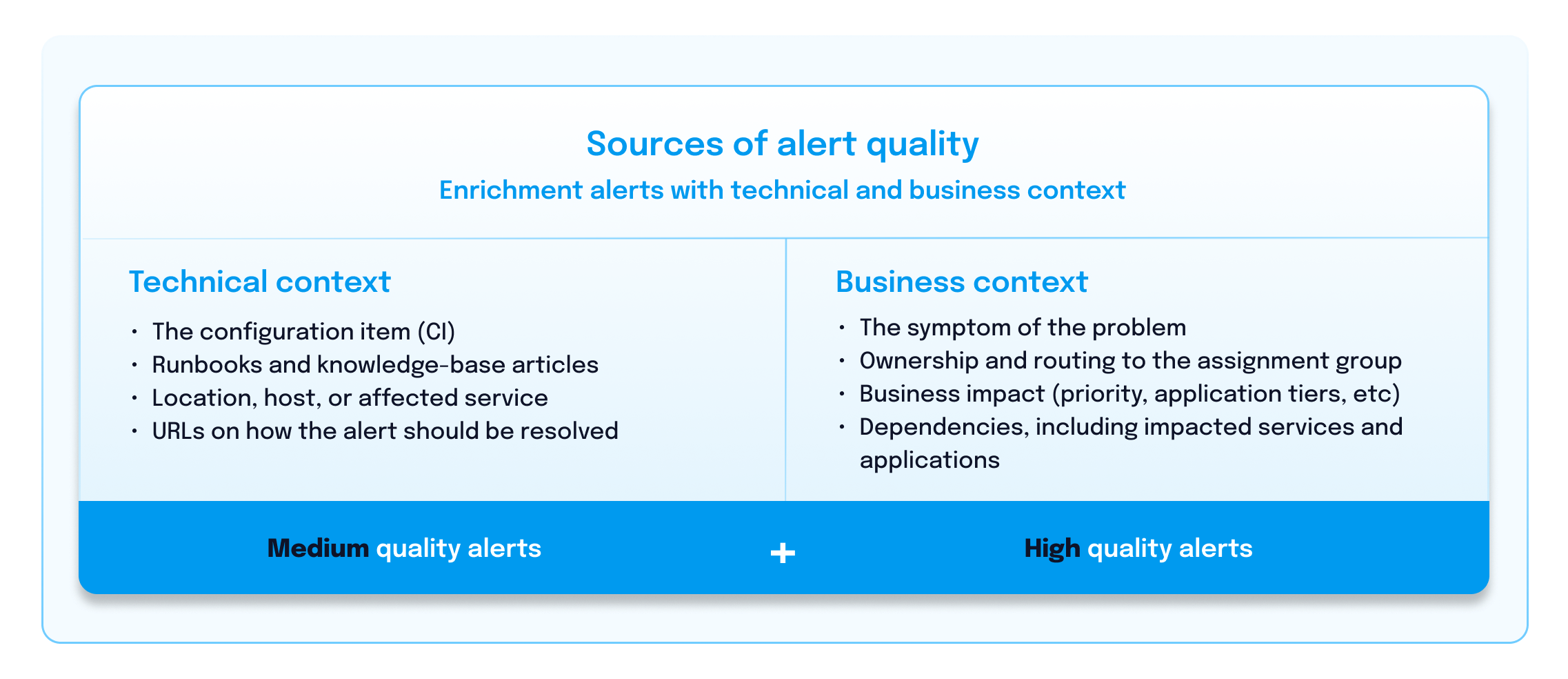

Step 2: Create actionable alerts

Make sure your organization is aligned on creating and defining actionable alerts. This means that your alerts provide clear guidance on what actions need to be taken. Alerts should contain both technical and business context to minimize confusion and increase incident response efficiency.

Take a cloud infrastructure environment, for instance. In this case, defining actionable alerts could mean that when CPU utilization exceeds a certain threshold, the alert payload includes the metric value and recommended actions, such as scaling resources or optimizing code, to address the performance issue immediately. This ensures that the operations team knows exactly what steps to take when the alert triggers.

Step 3: Enforce alert payload standards

NOC teams and incident management teams can enforce these standards by opening tickets or using the “misconfigured” tag to identify alerts that don’t adhere to the defined standards. While enforcement through shaming is not the ideal approach, it can sometimes be effective in driving compliance. Adhering to these standards can reduce the number of alerts that need escalation to higher-level engineers, ultimately saving costs and enhancing productivity.

Step 4: Ensure accurate alert threshold tuning

Be sure to consider the role of alert threshold tuning in your optimization process. Tuning the thresholds for alert generation ensures that alerts are meaningful and actionable. It involves setting the correct parameters so that alerts are triggered only when they signify real issues or potential problems so you can reduce false positives.

This fine-tuning is essential to balance between being alerted too often and not soon enough. Reduce alert noise from outdated thresholds by reviewing and correctly setting your thresholds. This ensures that the alerts that come through are relevant and require attention, making the process of correlation and prioritization more effective.

This step is critical in dynamic, agile IT environments where the normal behavior of systems can change over time and with personnel changes, necessitating regular threshold reviews and adjustments.

Step 5: Correlate and prioritize alerts

Meet with your NOC or incident management team to discuss where to start with building correlation and prioritization strategies. These teams have valuable insights into incident evolution and can help define which alerts should be correlated and how incidents should be prioritized. Alert correlation is critical for your alert payload standardization. That’s because it can:

- Reduce your alert volume: Correlation helps reduce the noise and volume of alerts by grouping related incidents logically. By bundling alerts with similar characteristics or impacts, you can significantly reduce the workload on your IT teams and prioritize the most critical issues.

- Ensure more proactive monitoring. Correlation allows you to proactively identify patterns and anomalies in your alerts, ensuring you don’t miss critical incident signals.

- Get to the root cause faster: It’s important to quickly get to the root cause of an issue and let your teams triage from there. Effective correlation helps IT teams quickly identify the source of problems, enabling faster resolution and minimizing downtime.

Being able to reliably and effectively conduct alert correlation creates a foundation for ITOps automation. Take it from BigPanda Senior Adoption Advisor, Brady Pannabecker, who shared during our podcast on What It Takes To Make Automation Work In ITOps.

“If you automated something with no correlation, you’re just moving the volume from one place to another.”

– Brady Pannabecker, Senior Adoption Advisor.

Step 6: Identify ownership with prioritization and routing

Prioritization and routing go hand in hand. That’s why IT leaders must consider ownership and the roles of different teams in incident response. Prioritization should be based on historical data and impact analysis to promptly address the most critical issues.

Effective routing allows alerts to quickly reach the right teams or individuals for resolution, minimizing response times and improving overall incident management.

Step 7: Let go of CMDB perfection

There is great value in setting a standard for alert quality, even in the absence of a complete Configuration Management Database (CMDB). While a CMDB serves as a central hub for data, you don’t have to store every type of asset information in your CMDB exclusively.

In fact, you can use AIOps to bring in this additional asset information, enrich the data, and remove the need for perfection in your CMDB. The more information and context provided to an incident, the better.

Step 8: Take action

Taking action and not getting stuck in decision paralysis is the most critical step. Even if you take a small step like standardizing your alert payloads or setting thresholds more effectively, the journey to better alert correlation and smoother ITOps begins with action.

Start with making practical improvements to your alert payloads now. This sets the stage for further discussions on enhanced IT automation and AIOps capabilities.

Go from better alert payloads to correlation with BigPanda

BigPanda AIOps products provide robust features for standardizing alert payloads and enhancing alert correlation for more proactive ITOps.

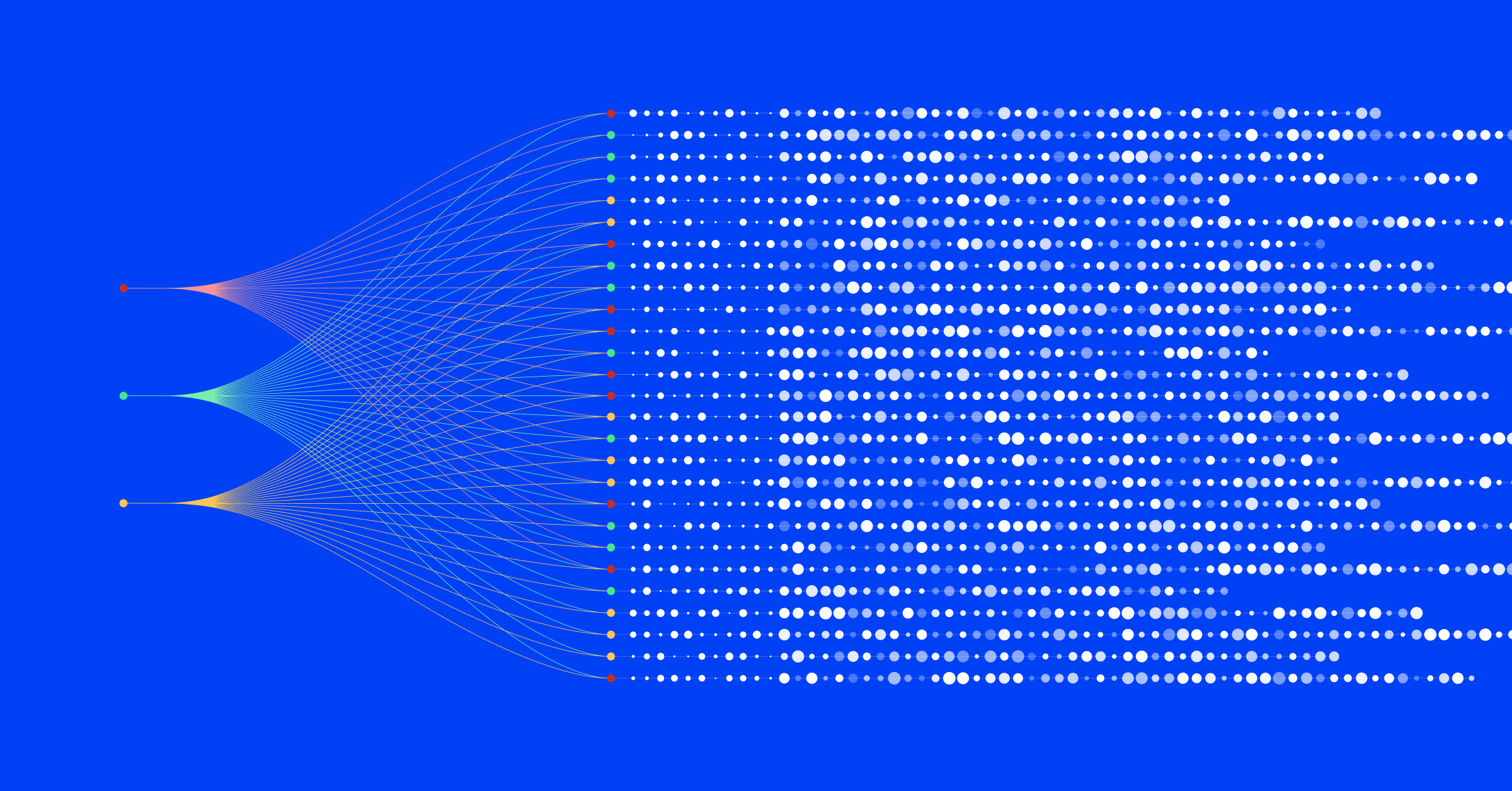

Our advanced algorithms and machine learning capabilities allow for the consolidation of diverse alert data into a unified format, ensuring seamless integration and streamlined processing.

- Actionable alerts at your fingertips: Alert Intelligence from BigPanda distills millions of events into contextualized, actionable, and high-quality intelligent alerts in a single pane of glass.

- Improve the actionability of alerts: Leverage BigPanda Unified Analytics dashboards to elevate the actionability of alerts. Sort notifications by their quality to fine-tune alert configurations, filter unnecessary noise, and enhance monitoring and observability strategies.

- Automated alert correlation: Operator-defined or ML-suggested correlation patterns bundle alerts from different components within the IT environment to determine if seemingly isolated alerts are interconnected and part of the same incident.

- AI-driven root cause analysis: BigPanda AI automatically identifies the underlying reason for issues or incidents in complex and dynamic IT environments by Automated Incident Analysis and Root Case Changes to deliver faster incident resolution.

Try a demo for yourself and see how BigPanda facilitates better correlation, analysis, and automated response to significantly improve your operational workflows and IT decision-making.